Let’s look at some common misconceptions about performance testing:

“It’s only needed for big applications.”

Even small apps and startups need performance testing. Users today expect seamless experiences, regardless of scale. Slow performance is one of the top reasons people abandon apps and websites, and that can derail a business plan.

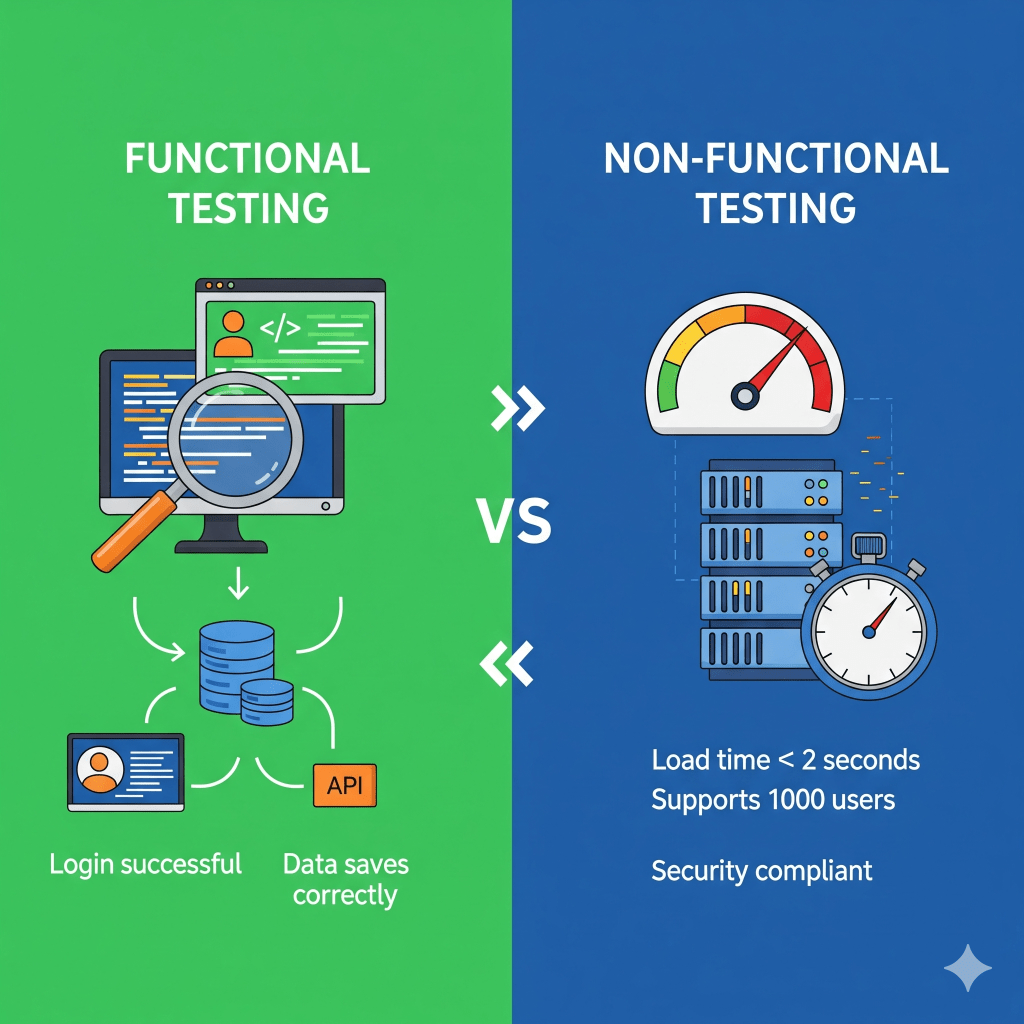

“I did unit testing, system testing, and user acceptance testing. None of them complained. That is enough.”

Unit, system, and user acceptance tests focus on functionality. Functional tests confirm that features work correctly, but they don’t reveal how the system behaves under heavy traffic. An application can pass every functional test and still fail miserably in production.

Take J.Crew’s Black Friday sale in 2018. The site’s features worked, but it went down under peak holiday traffic, with lost sales reportedly estimated at around $775,000.

“If it works on my machine (lower environment), it will work in production.”

This is the classic response I’ve received from stakeholders, as if end users were going to run the app on the developers’ own machines. Development and test environments are usually more controlled and less demanding. Real-world conditions like network latency, concurrent usage, or large data volumes expose issues that never show up in lower environments.

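One striking case: Knight Capital in 2012. A faulty deployment left obsolete code running on one of its production servers, a problem its test environments never exposed, and the resulting runaway trades cost the firm $440 million in about 45 minutes.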

“It’s too expensive and time-consuming.”

Yes, performance testing takes effort. But calling it “too expensive” ignores the bigger cost of outages, angry customers, and lost revenue. In May 2017, British Airways suffered a major IT failure that grounded its flights out of Heathrow and Gatwick. The estimated loss? Over $100 million. Compared to that, investing in performance testing looks cheap.

“Performance testing tools alone will solve the problem.”

Tools like JMeter, LoadRunner, or NeoLoad are powerful, but they don’t fix issues by themselves. The real value comes from designing meaningful tests, interpreting results, and collaborating with developers, architects, and DevOps to resolve bottlenecks. Data without context is just noise.

“Performance testing is just about speed.”

Many assume it’s only about making the application run faster. In reality, performance testing covers stability, scalability, resource utilization, and reliability—not just raw speed. An app might load quickly with 10 users but collapse with 1,000 if scalability isn’t tested.

Example: during Pokémon GO’s launch in 2016, the app was snappy for small groups of beta testers, but it buckled under millions of players worldwide, frustrating fans everywhere.

“We can do performance testing at the end of the project.”

This is the most common myth. Leaving it to the end means performance bottlenecks might be buried deep in the architecture, making them very expensive to fix. Ideally, performance testing should be part of performance engineering—done throughout development.

Think of Ticketmaster’s high-profile ticket-sale failures, such as the Taylor Swift Eras Tour presale in November 2022. The problems surfaced only on sale day, when it was too late to fix them, and they made headlines.

“Performance testing is the same as load testing.”

Load testing is just one type of performance test. Others include stress testing, spike testing, endurance testing, and scalability testing. Each reveals a different aspect of system behavior, and ignoring them is like looking at only one piece of the puzzle.

A famous example: in October 2013, Healthcare.gov crashed at launch under loads it had not been tested for, preventing millions of Americans from signing up for health insurance. Beyond its size, it was big in impact.
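
The test types listed above differ mainly in the shape of the traffic they generate. The minimal Python sketch below models each type as a load profile, a function that maps elapsed time to the number of concurrent virtual users a tool would simulate. The function names and every number in it are hypothetical and chosen only for illustration. Tools such as JMeter, LoadRunner, or NeoLoad express these shapes through their own configuration, not through this code.

```python
"""Illustrative load profiles: target concurrent users as a function of elapsed time.

All function names, user counts, and durations are hypothetical examples,
not recommendations or any tool's API.
"""
from typing import Callable, Sequence

Profile = Callable[[float], int]  # elapsed seconds -> target concurrent users


def load_profile(peak: int = 1_000, ramp_s: float = 300) -> Profile:
    """Load test: ramp linearly up to the expected peak, then hold it."""
    def users(t: float) -> int:
        return int(peak * min(t / ramp_s, 1.0))
    return users


def stress_profile(start: int = 1_000, step: int = 500, step_s: float = 120) -> Profile:
    """Stress test: add users in steps beyond the expected peak.

    The count grows without bound here; a real run would stop once errors or
    latency cross a threshold (not modeled in this sketch).
    """
    def users(t: float) -> int:
        return start + step * int(t // step_s)
    return users


def spike_profile(base: int = 100, spike: int = 5_000,
                  spike_start_s: float = 600, spike_len_s: float = 60) -> Profile:
    """Spike test: steady baseline with a sudden, short burst of traffic."""
    def users(t: float) -> int:
        in_spike = spike_start_s <= t < spike_start_s + spike_len_s
        return spike if in_spike else base
    return users


def endurance_profile(level: int = 500) -> Profile:
    """Endurance (soak) test: moderate, constant load held for a long time."""
    def users(t: float) -> int:
        return level
    return users


def scalability_profile(steps: Sequence[int] = (100, 250, 500, 1_000, 2_000),
                        step_s: float = 600) -> Profile:
    """Scalability test: hold each load level long enough to measure it, then step up."""
    def users(t: float) -> int:
        index = min(int(t // step_s), len(steps) - 1)
        return steps[index]
    return users


if __name__ == "__main__":
    profiles = {
        "load": load_profile(),
        "stress": stress_profile(),
        "spike": spike_profile(),
        "endurance": endurance_profile(),
        "scalability": scalability_profile(),
    }
    # Sample each profile at a few points in time to compare their shapes.
    for name, profile in profiles.items():
        samples = [profile(t) for t in (0, 150, 300, 610, 1_200)]
        print(f"{name:>11}: {samples}")
```

Running the script prints the user count each profile asks for at a few sample times. The load profile plateaus at the expected peak, the stress profile keeps climbing past it, the spike profile jumps only briefly, the endurance profile stays flat, and the scalability profile steps up level by level.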
